On Friday 22 September, many Londoners who regularly use Uber received an email. “As you may have heard,” it began, “the Mayor and Transport for London have announced that they will not be renewing Uber’s licence to operate in our city when it expires on 30 September.”

“We are sure Londoners will be as astounded as we are by this decision,” the email continued, with a sense of disbelief. It then pointed readers towards an online petition against this attempt to “ban the app from the capital.”

Oddly, the email was sent by a company that TfL have taken no direct action against, and referred to an app that TfL have made no effort (and have no power) to ban.

When two become one

If that last statement sounds confusing, that’s because – to the casual observer – it is. The consumer experience that is “Uber” is not actually the same thing as the companies that deliver it.

And “companies” is, ultimately, correct. Although most users of the system won’t realise it, over the course of requesting, completing and paying for their journey an Uber user in London actually interacts with two different companies – one Dutch, one British.

The first of those companies is Uber BV (UBV). Based in the Netherlands, this company is responsible for the actual Uber app. When a user asks to be picked up and chooses a driver, they are interacting with UBV. It is UBV who request that a driver be dispatched to the user’s location. It is also UBV who then collect any payment required.

At no point, however, does the user actually get into a car owned, managed or operated by UBV. That duty falls to the second, UK-based company – Uber London Ltd. (ULL). It is ULL who are responsible for all Uber vehicles – and their drivers – in London. Just like Addison Lee or any of the thousands of smaller operators that can be found on high streets throughout the capital, ULL are a minicab firm. They just happen to be one that no passenger has ever called directly – they respond exclusively to requests from UBV.

This setup may seem unwieldy, but it is deliberate. In part, it is what has allowed Uber to skirt the blurred boundary between being a “pre-booked” service and “plying-for-hire” (a difference we explored when we last looked at the London taxi trade back in 2015). It is also this setup that allows Uber to pay what their critics say is less than their ‘fair share’ of tax – Uber pays no VAT and, last year, paid only £411,000 in Corporation Tax.

The average Londoner can be forgiven for not knowing all of the above (commentators in the media, less so). In the context of the journey, it is the experience that matters, not the technology or corporate structure that delivers it. In the context of understanding the causes – and likely outcome – of the current licensing situation, however, knowing the difference between the companies that make up that Uber experience is important. Without that, it is very easy for both Uber’s supporters and opponents to misunderstand what this dispute is actually about.

The raw facts

Uber London Ltd (ULL) are a minicab operator. This means they require a private hire operator’s licence. Licences last five years and ULL were last issued one in May 2012. They thus recently applied for its renewal.

ULL were granted a four-month extension to that licence this year. This was because TfL, who are responsible for regulating taxi services in London, had a number of concerns that ULL might not meet the required standard of operational practice that all private hire operators – from the smallest cab firm to Addison Lee – are required to meet. Issuing a four-month extension rather than a new five-year licence was intended to provide the time necessary to investigate those issues further.

On Friday 22 September, TfL announced that they believe ULL does not meet the required standard in the following areas:

- Their approach to reporting serious criminal offences.

- Their approach to how medical certificates are obtained.

- Their approach to how Enhanced Disclosure and Barring Service (DBS) checks are obtained.

- Their approach to explaining the use of Greyball in London – software that could be used to block regulatory bodies from gaining full access to the app and prevent officials from undertaking regulatory or law enforcement duties.

As a result, their application for a new licence has been denied.

ULL have the right to appeal this decision and can remain in operation until that appeal has been conducted. Similarly, if changes are made to their operational practices to meet those requirements to TfL’s satisfaction, then a new licence can be issued.

Put simply, this isn’t about the app.

So why does everyone think it is?

Washington DC, September 2012

“I know that you like to cast this as some kind of fight,” said Mary Cheh, Chair of the Committee on Transport and the Environment. “Do you understand that? I’m not in a fight with you.”

“When you tell us we can’t charge lower fares, offer a high-quality service at the best possible price, you are fighting with us,” replied Travis Kalanick, Uber’s increasingly high-profile (and controversial) CEO.

“You still want to fight!” Cheh sighed, throwing her hands in the air.

Back in San Francisco, Salle Yoo, Uber’s chief counsel, was watching in horror via webcast. Pulling out her phone, she began frantically texting the legal team sitting with Kalanick in the room:

Pull him from the stand!!!

It was too late. Kalanick had already launched into a monologue on toilet roll prices in Soviet Russia. He had turned what had been intended as a (relatively) amicable hearing about setting a base fare for Uber X services in the city into an accusation – and apparent public rejection by Kalanick – of an attempt at consumer price fixing.

The events that day, which are recounted in Brad Stone’s ‘The Upstarts’, were important. In hindsight, they marked the point where Uber shifted gears and not only started to aggressively move in on existing taxi markets, but also began to use public support as a weapon.

Cause and effect

Weirdly, one of the causes for that shift in attitude and policy was something London’s Black Cab trade had done.

Kalanick and fellow founder Garrett Camp had launched Uber in 2008 with a simple goal – to provide a high-quality, reliable alternative to San Francisco’s notoriously awful taxis.

What’s important to note here is that neither man originally saw Uber as a direct price-for-price rival to the existing San Francisco taxi trade. San Francisco, like many cities in the USA, used a medallion system to help regulate the number of taxis in operation at any one time. Over the years, the number of medallions available had not increased to match rising passenger demand.

Camp – who was living in San Francisco after the sale of his first startup, StumbleUpon – became increasingly frustrated at his inability to get around town. Then he discovered that town car licences (for limousine services) weren’t subject to the medallion limits. Soon he began to float the idea of a car service for a pool of registered users that relied on limousine licences instead.

This would be more expensive than a regular cab service, but he argued that the benefits of better quality vehicles and a more reliable service would make it worthwhile in the eyes of users. A friend and fellow startup entrepreneur, Kalanick, agreed. They hired some developers and then started touting the idea to investors (often describing it as “Airbnb for taxis”). Uber grew from there.

As the company expanded, this ‘luxury on the cheap’ model sometimes brought Uber into conflict with the existing US taxi industry and individual city regulators. The fact that they were rarely undercutting the existing market helped limit resistance, however.

What eventually shifted Uber into a different gear was the arrival of a threat from abroad – Hailo.

Hailo wars

Founded by Jay Bregman in 2009, Hailo was a way for London’s Black Cab trade to combat the inroads private hire firms had been making into their market share. Those firms were starting to use the web and digital technology to make pre-booking much more convenient. Bregman had seen Uber’s app and realised the potential. He created Hailo as a way to help Black Cabs do the same thing.

At this, Hailo was initially successful. Bregman, an American by birth, soon started casting his eyes across the Atlantic at the opportunities to do the same thing there. In March 2012, Bregman announced that Hailo had raised $17m to fund an expansion into the US, where it would attempt to partner with existing cab firms as it had done in London.

Expansion into London had already been on Uber’s radar. They had also been aware of Hailo. Bregman’s announcement, however, turned a potential rival in an overseas market into a direct, domestic threat. Uber’s reaction was swift and aggressive, as was the ‘app war’ which soon erupted in cities such as Boston and New York where both firms had a presence.

One of the crucial effects of the Hailo wars was that they finally settled a long-running argument that had existed over Uber’s direction between its two founders. Camp had continued to insist that Uber offer luxury at a (smallish) premium. Kalanick had argued that it was convenience, at a low cost, that would drive expansion. When Hailo crossed the pond, offering a low-cost service, Kalanick’s viewpoint finally won out based on necessity.

Controlling the debate

As the Washington hearing would show later that year, Kalanick’s victory had enormous consequences – not just in terms of how the service was priced and would work (it would lead to the launch of Uber X, the product with which most users are familiar), but in how Uber would pitch itself to the public.

The approach that Kalanick took in his Washington testimony, of presenting the public’s needs as identical to Uber’s own, has since become a standard part of Uber’s tactics for selling expansion into new markets. The ability – often justified – to claim that Uber offers a better service at a cheaper price is a powerful selling point, one that Uber have never shied away from pushing.

It’s a simple argument. It is also one that Uber have used to drown out more complex objections from incumbent operators, regulators or politicians in areas into which they’ve expanded. It is also one of the reasons why Uber have continued to push the narrative that they are a technical disruptor when skirting (or sometimes ignoring) existing regulations – because being an innovative startup is ‘sexy’. Being a large company ignoring the rules isn’t.

Back to the licence

Understanding where Uber have come from, and their approach to messaging, is critical to understanding the London operator licence debate. Uber may have tried to frame it as a debate about the availability (or otherwise) of the app, but that’s not what this is. It is a regulatory issue between TfL, as regulator, and ULL, as an operator of minicabs.

The decision to cast the debate in this way is undoubtedly deliberate. Uber are aware that their users are not just passengers, but a powerful lobbying group when pointed in the right direction – as long as the message is something they will get behind. Access to the Uber app is a simple message to sell; the need to lighten ULL’s corporate responsibilities is not.

Corporate responsibilities

One of the primary responsibilities of the taxi regulator in most locations is the consideration of passenger safety. This is very much the case in London – both for individual drivers and for operators.

The expectation of drivers is relatively obvious – that they do not break the law, nor commit a crime of any kind. The expectation of operators is a bit more complex – it is not just about ensuring that drivers are adequately checked before they are hired, but also that their activity is effectively monitored while they are working and that any customer complaints are taken seriously and acted upon appropriately.

The nature of that action can vary. The report of a minor offence may warrant only the intervention of the operator themselves or escalation to TfL. It is expected, however, that serious crimes will be dealt with promptly, and reported directly to the police as well.

On 12 April 2017, the Metropolitan Police wrote to TfL expressing a major concern. In the letter, Inspector Neil Bellany claimed that ULL were not reporting serious crimes to them. They cited three specific incidents by way of example.

The first of these related to a ‘road rage’ incident in which the driver had appeared to pull a gun, causing the passenger to flee the scene. Uber dismissed the driver, having determined that the weapon was a pepper spray, not a handgun, but failed to report the incident to the police. The police only became aware of it a month later, when TfL, as regulator, processed ULL’s incident reports.

At this point, the police attempted to investigate (pepper spray is an offensive weapon in the UK) but, the letter indicated, Uber refused to provide more information unless a formal request was submitted under the Data Protection Act.

The other two offences were even more serious, and here it is best simply to quote the letter itself:

The facts are that on the 30 January 2016 a female was sexually assaulted by an Uber driver. From what we can ascertain Uber have spoken to the driver who denied the offence. Uber have continued to employ the driver and have done nothing more. While Uber did not say they would contact the police the victim believed that they would inform the police on her behalf.

On the 10 May 2016 the same driver has committed a second more serious sexual assault against a different passenger Again Uber haven’t said to this victim they would contact the police, but she was, to use her words, ‘strongly under the impression’ that they would.

On the 13 May 2016 Uber have finally acted and dismissed the driver, notifying LTPH Licensing who have passed the information to the MPS.

The second offence of the two was more serious in its nature. Had Uber notified police after the first offence it would be right to assume that the second would have been prevented. It is also worth noting that once Uber supplied police with the victim’s details both have welcomed us contacting them and have fully assisted with the prosecutions. Both cases were charged as sexual assaults and are at court next week for hearing.

Uber hold a position not to report crime on the basis that it may breach the rights of the passenger. When asked what the position would be in the hypothetical case of a driver who commits a serious sexual assault against a passenger they confirmed that they would dismiss the driver and report to TfL, but not inform the police.

The letter concluded by pointing out that these weren’t the only incidents the Metropolitan Police had become aware of. In total, Uber had failed to report six sexual assaults, two public order offences and one assault to the police. This had led to delays of up to seven months before they were investigated. Particularly damning: in the case of both public order offences, the prosecution time limit had passed by the time the police became aware of them.

As the letter puts it:

The significant concern I am raising is that Uber have been made aware of criminal activity and yet haven’t informed the police. Uber are however proactive in reporting lower level document frauds to both the MPS and LTPH. My concern is twofold, firstly it seems they are deciding what to report (less serious matters / less damaging to reputation over serious offences) and secondly by not reporting to police promptly they are allowing situations to develop that clearly affect the safety and security of the public.

The Metropolitan Police letter is arguably one of the most important pieces of evidence as to why TfL’s decision not to renew ULL’s licence is the correct one right now. This is because one of the most common defences of Uber is that they provide an important service to women and others late at night. In places where minicabs won’t come out, or for people whose personal experience has left them uncomfortable using Black Cabs or other minicab services, Uber offer a safe, trackable alternative.

The reasoning behind that argument is entirely valid. Right now, however, TfL have essentially indicated that they don’t trust ULL to deliver that service. The perception of safety does not match the reality.

Again, it is not about the app.

Greyball

Concerns about vetting and reporting practices at ULL may make up the bulk of TfL’s reasons for rejection, but they are not the only ones. There is also the issue of Greyball – a custom piece of software designed by Uber which can replace the ‘real’ Uber map that the user sees on their device with a convincing fake one.

Greyball’s existence was revealed to the world in March 2017 as part of an investigation by the New York Times into Uber’s activities in Portland back in 2014. The paper claimed that, knowing it was breaking the regulations on taxi operation in the city, Uber had used data within its app to identify likely city officials and target them with false information. This ensured that those people were not picked up for rides, in turn hampering attempts by the authorities to police Uber’s activities there.

Initially, Uber denied the accusations. They confirmed that Greyball existed, but insisted that it was only used for promotional purposes, testing and to protect drivers in countries where there was a risk of physical assault.

Nonetheless, the seriousness of the allegations and the evidence presented by the New York Times prompted the Portland Bureau of Transportation (PBOT) to launch an official investigation into Uber’s activities. That report was completed in April and made public at the beginning of September. In it, Portland published evidence – and an admission from Uber itself – that during the period in which it had been illegal for Uber to operate in Portland, the company had indeed used Greyball to help drivers avoid taxi inspectors. In Portland’s own words:

Based on this analysis, PBOT has found that when Uber illegally entered the Portland market in December 2014, the company tagged 17 individual rider accounts, 16 of which have been identified as government officials using its Greyball software tool. Uber used Greyball software to intentionally evade PBOT’s officers from December 5 to December 19, 2014 and deny 29 separate ride requests by PBOT enforcement officers.

The report did confirm that, after regulatory changes allowed Uber to enter the market legally, there seemed to be no evidence that Greyball had been used for this purpose again. As the report states, however:

[i]t is important to note that finding no evidence of the use of Greyball or similar software tools after April 2015 does not prove definitively that such tools were not used. It is inherently difficult to prove a negative. In using Greyball, Uber has sullied its own reputation and cast a cloud over the TNC [transportation network company] industry generally. The use of Greyball has only strengthened PBOT’s resolve to operate a robust and effective system of protections for Portland’s TNC customers.

Portland also went one further. They canvassed other transport authorities throughout the US asking whether, in light of the discovery of Greyball, they now felt they had evidence or suspicions that they had been targeted in a similar way. Their conclusions were as follows:

PBOT asked these agencies if they have ever suspected TNCs of using Greyball or any other software programs to block, delay or deter regulators from performing official functions. As shown in figure 3.0 below, seven of the 17 agencies surveyed suspected Greyball use, while four agencies (figure 3.1) stated that they have evidence of such tactics. One agency reported that they only have anecdotal evidence, but felt that drivers took twice as long to show up for regulators during undercover inspections. The other agencies cities believe that their enforcement teams and/or police officers have been blocked from or deceived by the application during enforcement efforts.

Uber are now under investigation by the US Department of Justice for their use of Greyball in the US.

Of all the transport operators in Europe, TfL are arguably the most technically literate. It is hard to see how the potential use of Greyball wouldn’t have raised eyebrows within the organisation, so it is not surprising to see it make the list of issues. A regulator is only as good as its ability to regulate and, as the Portland report shows, Uber now have ‘form’ for blocking that ability.

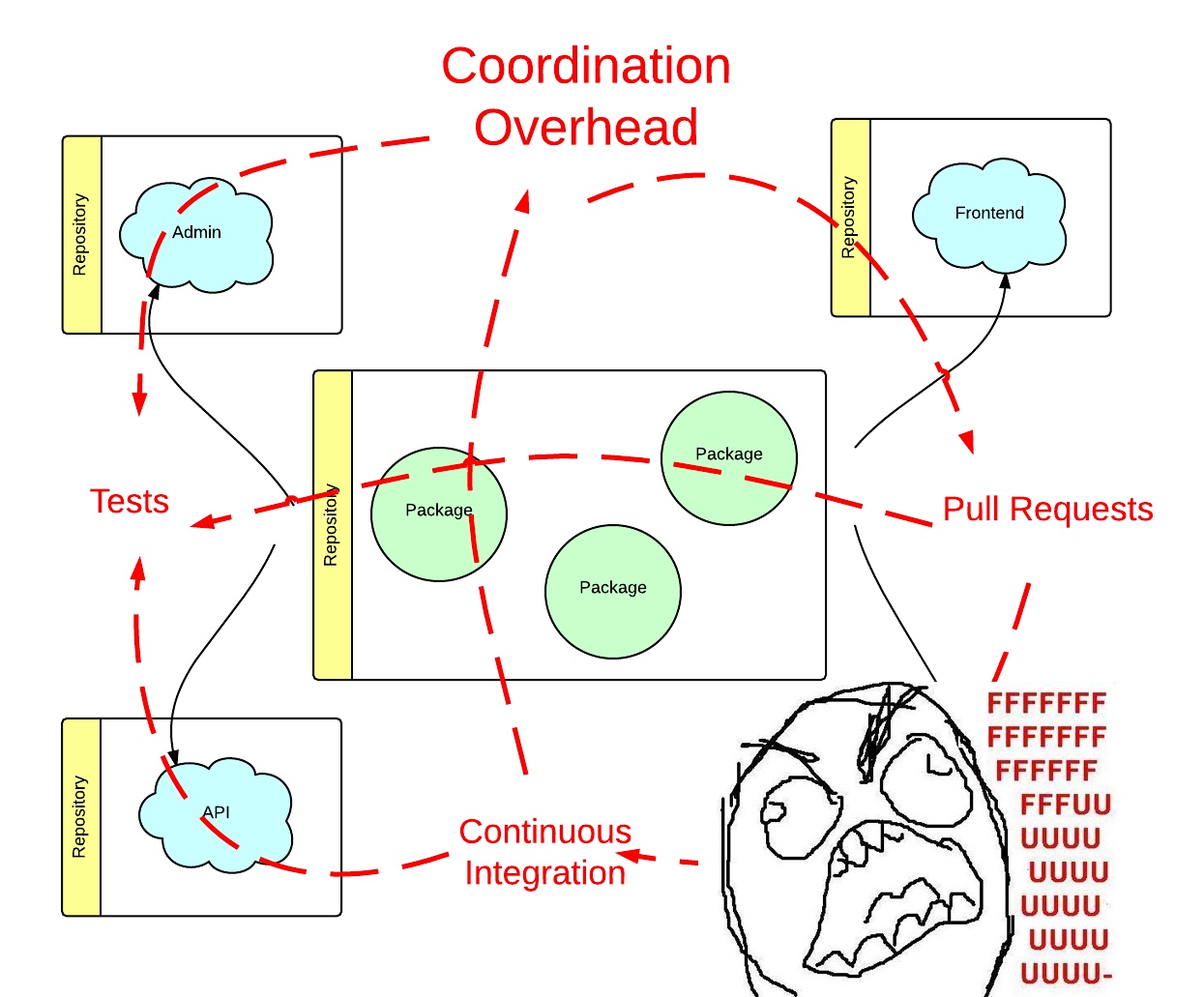

Sources suggest that TfL have requested significant assurances and guarantees that Greyball will not be used in this way in London. The fact that it makes the list of issues, however, suggests that this demand has not yet been met. It is possible this is one of the times when Uber’s setup – multiple companies under one brand – has caused a problem outside of ULL’s control.

Uber Global may ultimately be the only organisation able to provide such software assurances. Until now, they may simply not have realised just how important it was that they give them.

Understanding the economics

There is still much more to explore on the subject of Uber. Not just Uber London’s particular issues with TfL, but the economics of how they operate and what their future plans might be.

That last part is important because the main element of Uber’s grand narrative – their continued ability to offer low fares – is not as guaranteed a prospect as Londoners (and indeed all users) have been led to believe.

We will explore this more in our next article on the subject but, in the context of the current debate, it is worth bearing something in mind: Uber’s fares do not cover the actual cost of a journey.

Just how large the deficit is varies by territory and – as the firm don’t disclose more financial information than necessary – it is difficult to know what the shortfall per trip is in London itself. In New York, however, where some 2016 numbers are available, it seems that fares cover only 41% of the cost of each journey.

Just why Uber do this is something we will explore another time, but for now it is important just to know it is happening. It means that, without significant changes to Uber’s operational model, the company will never make a profit (indeed it currently loses roughly $2bn a year). As one expert in transport economics writes:

Thus there is no basis for assuming Uber is on the same rapid, scale economy driven path to profitability that some digitally-based startups achieved. In fact, Uber would require one of the greatest profit improvements in history just to achieve breakeven.

What it does mean, though, is that Uber’s cheap fares – sometimes argued to be one of the ways in which it provides a ‘social good’ for low-income users – are likely only temporary.

Indeed, the only way this won’t be the case is if there is a significant technical change to the way Uber delivers its service. In this regard, Uber has often drawn on its reputation as a ‘startup’ and pointed to the economies of scale achieved by companies such as Amazon.

Unfortunately, this simply isn’t how transport works. Up to 80% of the cost of each Uber journey is a fixed, per-journey cost – it goes on the driver, the fuel and the vehicle. This is a cost which scales in a linear fashion: twice as many journeys means roughly twice the cost. Put simply, once Amazon has built (and paid for) a warehouse, each extra book it stores there adds very little to its costs. The number of passengers Uber can stick in a car does not grow in the same way.

Uber, of course, are aware of this. Indeed, it’s why they have quickly become one of the biggest investors in self-driving vehicle technology (and are subject to a lawsuit from Waymo, the self-driving car company spun out of Google, over the alleged theft of information related to that subject).

Again, we will explore this more at a later date, but for now it is worth bearing in mind that Uber’s stated concern for their ‘40,000 drivers’ in London should be taken with a considerable pinch of salt. Not only is the active figure likely closer to 25,000 (based on Uber’s own growth forecasts from last year), but they would also quite like to get rid of them anyway – or at the very least squeeze their income further in order to push that cost-per-journey figure closer to being in the black.

Bullying a bully

None of these issues with Uber’s operations are likely to be exclusive to London. Which raises the question – why have TfL said ‘no’ when practically everyone else has said ‘yes’?

To a large extent, the extreme public backlash this news received and the size of Uber’s petition provide the answer – because Uber are a bully. Unfortunately for them, TfL can be an even bigger one.

TfL aren’t just a transport authority. They are arguably the largest transport authority in the world. Indeed, legislatively speaking, TfL aren’t really a transport authority at all (at least not in the way most of the world understands the term). TfL are constituted as a local authority – one with an operating budget of over £10bn a year. They also have a deep reserve of expertise, both legal and technical.

Nothing to divide

To make things worse for Uber, TfL aren’t accountable to an electorate. They serve, and act, at the pleasure of just one person – the Mayor of London, the third most powerful directly elected official in Europe (behind the French and Russian presidents).

This is a problem for Uber. In almost every other jurisdiction they have operated in, Uber have been able to turn their users into a political weapon. That weapon has then been turned on whatever political weak point exists within the legislature of the state or city they are attempting to enter, using populism to get regulations changed to meet Uber’s needs.

The situation in London is practically unique, simply because there is only one weak point that can be exploited – that which exists between TfL and the Mayor.

Just how much direct power the Mayor of London exercises over TfL is one of the themes that has been emerging from our transcripts of the interviews conducted for the Garden Bridge Report. To quote the current Transport Commissioner, Mike Brown, in conversation with Margaret Hodge, MP:

Margaret Hodge: But it’s your money.

Mike Brown: Yes, I know but the Mayor can do what he wants as the Chair of the TfL Board.

MH: Without accountability to the Board?

MB: Yes and Mayoral Directions are — the Mayor is actually extremely powerful in terms of Mayoral Directions. He or she can do whatever they want.

MH: What, to whatever upper limit you want?

Andy Brown: That’s right, I think, yeah.

MB: Yeah, pretty much is. Yeah — so arguably it’s more direct financial authority than even a Prime Minister would have, for example.

As long as TfL and the Mayor, Sadiq Khan, remain in lockstep on the licence issue, therefore, Uber’s most powerful weapon has no ammunition. 500,000 signatures mean nothing to TfL if the organisation has the backing of the Mayor and is confident of victory in court.

It is also worth noting that all TfL really wants Uber to do is comply with the rules. Despite the image that has been pushed in some sections of the media, TfL has not suddenly become the champion of the embattled London cabbie. TfL has always seen itself as the taxi industry’s regulator, not as the Black Cab’s saviour. This was true back in 2015 when the Uber debate really erupted in earnest and it is still true now.

Indeed, if TfL have any kind of ulterior motive for their actions, it is simply that they dislike the impact Uber are having on congestion within the capital, and the effect this congestion then has on the bus network. Uber would do well to remember the last time a minicab operator made the mistake of making it harder for TfL’s buses to run on time.

That the Mayor was prepared to go so public in his support for such an unpopular action should also serve as a warning for Uber.

When it comes to legal action, TfL are risk-averse in the extreme – there is a reason they have never sued the US Embassy over unpaid Congestion Charge fees. The current Mayor is even more so.

Whilst his field is human rights rather than transport, Khan is a lawyer himself and by all accounts a good one. GQ’s Politician of the Year is also an extremely shrewd political operator. It is unlikely that he would have lent his support to TfL on this subject unless he knew it was far more likely to make him look like a statesman who stands up to multinationals, than a man who steals cheap travel from the electorate.

Ultimately, the next few days (and beyond) will likely come to define the relationship between London and Uber. Indeed sources suggest that Uber have already begun to make conciliatory noises to TfL, as the seriousness of the situation bubbles up beyond ULL and UBV to Uber Global itself. Only time will tell if this is true.

In the meantime, however, the next time you see a link to a petition or someone raging about this decision being ‘anti-innovation’, remember Greyball. Remember the Metropolitan Police letter. Remember that this is about holding ULL, as a company, to the same set of standards with which every other minicab operator in London already complies.

Most of all though remember: it is not about the app.

In the next part of this series, we will look at the economics of Uber, their internal culture, impact on roadspace and relationship with their drivers.

The post Understanding Uber: It’s Not About The App appeared first on London Reconnections.